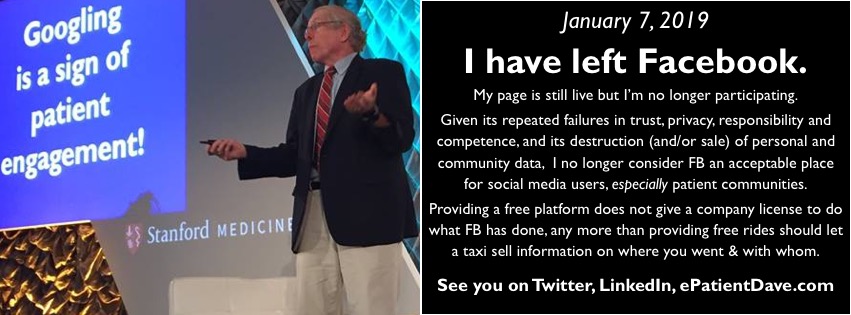

I have stopped participating on Facebook. I’m leaving my account live (so that my post about why I’m leaving is visible), but everything will be shut off as much as possible, and the rest will be ignored. No Messenger, no more posts on my timeline, no notifications, no tagging, etc.

I’ll be spending more time on LinkedIn and Twitter. I hope you’ll follow those pages, or use the Subscribe form on the right side of my blog page.

This isn’t an easy decision because it will be harder to keep in touch with everyone in my life, not least my family (including famous daughter and grandchild) and the many friends I’ve made in my travels. But I’ve decided we must stand up.

The rest of this post explains why; if you don’t need that info, ignore it – but please keep in touch.

I’ve concluded that Facebook is incompetent about security of our data and irresponsible about the side effects of what happens when marketers, bots, and monitors interact with the site. It allows (or fails to stop) unscrupulous behavior by unseen marketers, behind the scenes or even posing as members of patient groups.

In my opinion none of us should entrust a single bit of patient information to Facebook. Of course it’s up to you: you may want to stay, all things considered, and I support you in doing what you want. But be aware of what could be going on behind the curtain.

I’ll discuss three areas that have multiple evidence points.

1. Covert marketing within patient groups

For most of us, if someone is secretly selling on Facebook it may be merely annoying. But in some cases these people have done really bad things with patient groups.

- Facebook has secretly let marketers scrape (copy from the screen) the names of members of private (“closed”) cancer groups, including breast cancer (see Facebook allowed third party marketers to download names of people in private groups), and then said they didn’t realize it was happening, even though the marketers were using a browser extension provided by FB.

- Or this revolting story subtitled “Huge groups of vulnerable people looking for help are a rehab marketer’s dream,” about an addiction support group containing members who are covert marketers … and one woman who got banned from the group for calling them on it, leaving her without the support (for her troubled son) that she had joined to find.

Treating people this way when they have any kind of medical or mental health problem is flat-out predatory, and I believe patients should be aware that they might want to stay away. I would. (I won’t say “should stay away” because that’s a personal choice. But I won’t stand for it being in dark alleys.)

Go to a legitimate, above-board patient site like SmartPatients.com or PatientsLikeMe or Inspire.com. They’re free, too! But, update: on Twitter, user Anita Figueroas said “sites like [Inspire] limit our outreach (links to our website aren’t allowed).”

2. Incompetence at security – and burying the evidence

An especially bad case of skullduggery and self-interest happened last July, when Wall Street was rattling swords at Facebook because FB had not been truthful to investors about the Cambridge Analytica election scandal: SEC Probes Why Facebook Didn’t Warn Sooner on Privacy Lapse (Wall Street Journal). (It’s one thing to mess with the public, but mess with Wall Street and s4!t gets serious, eh?)

Coincidentally, right when that happened, a thriving private FB #MeToo group of 15,000 sexual abuse survivors got hacked by trolls (see the Wired article How a Facebook group for sexual assault survivors became a tool for harassment), who proceeded to post vicious sexual images to certain members, privately or publicly in that group. When the admins reported it to FB, FB didn’t investigate – without warning they ERASED THE WHOLE GROUP, destroying all the evidence – not to mention all the group’s past conversations, networks of contacts, etc.

The company has gone too far, to the point where it’s time to walk away.

3. Incompetence and haphazard management of hate speech issues

Clearly, after the scandals around the 2016 elections and alt-right hate problems, Facebook needed to do something about all the fraudulent accounts and hate speech they were allowing. But rather than figuring out an approach that could have been costly – actually being careful about rules – they went for cheap and sloppy, because “careful” ain’t cheap. The result has been so dishearteningly inept that it helped put the final nail in the coffin of my tolerance for being there.

It’s summed up in two articles about how they’re clumsily handling censorship vs freedom of speech – a very delicate issue in these times, which they’re trying to handle by sending disorganized rules created by random people everywhere to cheap call center personnel, in the form of PowerPoint slides!

- June 2017, ProPublica: Facebook’s Secret Censorship Rules Protect White Men From Hate Speech But Not Black Children (Yes, it literally says that; read it. An interesting contrast to the perception that Silicon Valley is reflexively left-wing.)

- Nov 2018, Rolling Stone: “Who will fix FB?” including a sample story of a guy whose legit website got banned from FB as collateral damage during a sweep intended to erase frauds … it seems nobody checked whether the rules were working as intended! That is WICKED bad in a software company. Blind, unthinking execution of rules written by someone somewhere, carried out (the article suspects) by workers in low-priced overseas call centers. And nobody checking.

The decision to actually leave Facebook started in mid-December. (It had come up several times, but throughout 2018 it got worse and worse.) Then, right after Christmas this came out:

- 12/27/18, NYTimes: Inside Facebook’s Secret Rulebook for Global Political Speech. An employee leaked the 1,400-page rulebook FB’s censors are supposed to use.

The leaker said FB “was exercising too much power, with too little oversight — and making too many mistakes.” Mistakes like that can cause harm; harm that happens entirely because the company is being reckless.

Beware of technology carelessly used in the pursuit of large-scale automated profits

A basic reason why business loves automation is that human intervention is costly. “It doesn’t scale,” as they say. (Specifically, to do more of it, you have to hire and train more people, pay them benefits, etc. Silicon Valley likes things you can program into a system and sell to 100 or six billion people at the same cost.)

I love automation as much as anyone (it’s been my whole career), but there are limits: you have to check that the robots aren’t going insane. Especially in cases where harm can result. Like driverless cars. Or healthcare.

Some things truly require human judgment.

Other big tech companies are getting too big and irresponsible for their britches – e.g. Amazon wants to sell its “Rekognition” face recognition software to the TSA, even though (USA Today, July) it misidentified 28 members of Congress in an ACLU test. The software said those 28 faces matched a database of arrest photos!

Would you be eager to walk past that software at a TSA checkpoint on your next flight? Especially if you’re not Caucasian: “Nearly 40 percent of Rekognition’s false matches in our test were of people of color, even though they make up only 20 percent of Congress.” [ACLU]

Note: TSA hasn’t bought Rekognition yet, but USA Today says local law enforcement agencies already have. Do they have I.T. experts who can adjust and evaluate such new technology??

You should have exactly this kind of worry about anyone who’s touting some amazing “AI” (artificial intelligence) as the next miracle. AI is powerful and beginning to do great things – but it must be monitored and checked for unintended harms, or the robots truly will do large-scale harm in our civilization.

Some of the investor-oriented tweets and posts I’ve seen don’t care a thing about whether the stuff is accurate – “Hey, it’s NEW! It’s gonna be great! Don’t miss out – buy some today!”

Not me – not unless a thinking human is doing a sanity check on whether it gives accurate answers.

And that’s exactly what’s missing in Facebook’s irresponsible management of group security, covert marketers, and censorship vs free speech vs hate speech.

It’s often said that with great power comes great responsibility. Actions like FB’s and Amazon’s go way too far, and the last straw for me was the increasingly clear picture that Facebook truly isn’t going to let the risk of harm to others slow them down.

That would be irresponsible in any walk of life; in criminal law it’s called negligence. In healthcare (where I try to lead) it especially crosses the line into “must not be tolerated” territory.

So, Facebook, as they say on Shark Tank: I’m out.

Additional reading:

- Ars Technica, March 2018: Facebook scraped call, text message data for years from Android phones

  - “The company also writes that it never sells the data and that users are in control of the data uploaded to Facebook. This ‘fact check’ contradicts several details Ars found in analysis of Facebook data downloads and testimony from users who provided the data.”

- USA Today, April 2018: How Facebook can have your data even if you’re not on Facebook (Did you know FB collects data – totally without permission – on people who never even signed up for FB??)

- Washington Post, Nov 2018: Something really is wrong on the Internet. We should be more worried.

I hope to find my way, as I’m on neither Twitter nor LinkedIn. Have enjoyed your ideas since CompuServe days.

I understand, Nancylynn. Without a doubt Facebook knows that we’ve all gotten accustomed to connecting with each other easily and for free – so they believe they can do anything they want with us.

It’s a classic moral question: when you (and people you care about) are getting a free ride on something you value, how much do you put up with before you say “I’m out”? The past year’s news made me reach that point. :-( (That’s why I included all the detail. I didn’t decide this lightly.)

I honestly suspect Facebook (and importantly its investors) think they can get away with anything in exchange for the free ride. In this case, though, it’s not just me that’s affected – they’re being terribly (and sometimes disgustingly) predatory about people who have a real problem. I’ve had it.

What do you think – if they were completely open and honest about what they’re doing, would everyone be okay with that? Perhaps, but in that case why be sneaky, and deny it, to Congress and everyone?

Here is an editorial on this issue: Social Media in Health Care: Time for Transparent Privacy Policies and Consent for Data Use and Disclosure

https://www.thieme-connect.com/products/ejournals/abstract/10.1055/s-0038-1676332

Thank you, Christoph. (And hi, co-author Carolyn – I believe we’ve met, yes?)

Christoph, the article’s not open access – will we fully get the point if we just read the abstract? Some of the concepts you introduce are pretty arcane compared to the thoughts of ordinary daily users. And I sure would like to know what’s behind each footnote.

Yes, Dave, we met at AMIA, HDP, and possibly elsewhere. Small world!

The full text of the editorial is open at https://www.thieme-connect.com/products/ejournals/abstract/10.1055/s-0038-1676332

Best regards for the new year!

Oh, the text on the abstract page IS the full text?? When I clicked the Full Text tab it asked for a login. Okay, got it.

Now to start chewing on the deep stuff …

The Twitter link is wrong/broken. Remove the .com at the end. (https://twitter.com/epatientdave, not https://twitter.com/epatientdave.com)

Bravo. I run a group on Facebook and appreciate the info.

Oh, and yes, that is the full text (other than 2 questions and answers to confirm comprehension) https://sci-hub.tw/10.1055/s-0038-1676332 confirms it.

Yow, that’s an embarrassing typo, George – thanks for the catch.

I’m guessing you’ll read the other FB posts here, and are up on the news in general… if there are specific things you want to know about, ask and I’ll look around.

For those staying on FB who want to know more about data settings, thanks to Twitter pal @SusanLinOT for this June Washington Post article:

Hands off my data! 15 default privacy settings you should change right now.

“Say no to defaults. A clickable guide to fixing the complicated privacy settings from Facebook, Google, Amazon, Microsoft and Apple.”

Dave, I’d like to add Mayo Clinic Connect https://connect.mayoclinic.org to your list of reputable online community spaces for patients and caregivers.

Connect is an open forum for all (you don’t have to be a Mayo patient). To register, people only need an email (for notifications), @username and password. People can choose to use a pseudonym or real name. Further information is voluntary. People can disclose as much or as little as they feel comfortable with.

I explain why and how Connect is moderated and managed with respect to misbehavior, bad actors and misinformation in this blog post: https://socialmedia.mayoclinic.org/2018/09/13/dont-let-these-3-common-fears-stop-you-from-creating-a-vibrant-patient-community/

I hope you’ll add Connect to your list and am happy to answer any questions people might have about it.

While this article is about breaches and covert (bad) access to people’s information, we all know that the data and discussions that patients share in online communities are gold and can have a profound effect on people’s health and on health care. Working with the magic that happens in patient communities fuels me.

On Jan 17 at 2pm CST, I’m giving a free webinar that I’m super excited about. I hope you’ll join me.

How Mayo Clinic’s Patient Community Changes Health Care and Advances Science

https://socialmedia.mayoclinic.org/webinar/how-mayo-clinics-patient-community-changes-health-care-and-advances-science/

Happy New Year! I also left Facebook last month for the same reasons. They are more worried about the bottom line than about their users. If you don’t know what the product is, then you are the product. I was tired of being the product…

Keep fighting the good fight!

I understand the reason and I agree with the well articulated criticism of Facebook, but I disagree with the decision to leave. Leaving will have zero impact on Facebook, but will deprive many, many patients and clinicians of learning from you.

Thanks for bringing your tweeted response here, Peter.

I have no delusions about Zuck or anyone else at FB saying “Oh no! e-Patient Dave is pissed – we better run!” They’re not my intended audience.

I hope, first, to stir discussion of the cited issues. That includes people thinking about peer health advice on social media, but also the more general public.

Second, I’m not sure all that many patients and clinicians have been reading what I say on FB. I’m not privy to those numbers, since I’m not on a “business page” there. Of course I’ve always tried to teach there, but I don’t know how big an impact I’ve had. (My posts there that got a lot of likes and responses were always the goofy giggles.)

There’s another level of this, though, that I think has more long-term import: as you know, after the 2016 election (with all the talk of skewed content in our social media feeds), I concluded the only “grown-up” solution was to take responsibility ourselves for where we get information, and not just see what drifts by. That’s why I created my own Twitter list, which I called “Credible publications across the spectrum.”

There’s a further aspect, too – I didn’t say it in the post but it already came up in another comment this morning, so maybe it’s more central than I realized: your point that you and I want FB’s “microphone,” to reach everyone we can, implies the power they’ve achieved by being “the place where everyone is,” which I strongly suspect makes them think they can do anything they want to us, because no matter what they do, we ain’t gonna leave. (See what I mean?)

All things considered I made the choice to say there is a limit to how much misuse I’ll tolerate.

In the context of the post-election news realizations, I think it’s essential for us as a civilization to stop saying “Whatever” to whatever crap floats by in our feeds. We know there’s garbage, and the remedy is not to shrug, it’s to say “Not good enough” and look elsewhere.

p.s. See why I asked you to bring the discussion here instead of little ol’ Twitter? :-)

I suppose I should also say, perhaps above all, I want to draw attention to the value of, and needs of, the people in (medical) trouble who seek help by banding together on social media!

No solutions will be developed if people are generally not aware of those needs, and aware of the value of what they find there.

Perhaps it’s when needs are spotlighted, and abuse of those needs is spotlighted, that it becomes clear how immoral and unacceptable the abuse is.

I deleted Facebook in 2016. The funny thing is that no patient groups discussed any of this. Most of them appear to be run by content marketers and influencers. If you really want to see how much data they have access to, go and read up on Palantir. Corporations like Palantir and Optum seek out keywords and scrape data. They have full access to Facebook. The information is used not only for deceptive marketing but also to create counter-narratives so people don’t question anything. The owner of Palantir, Peter Thiel, believes that even marketing pseudoscience to desperate patients is a good thing, because there is a profit to be made.

Keep your eyes open, people! It does not look like there will be any regulations any time soon. Zuck got busted paying kids to turn over all of their FB data, and there was no reaction.