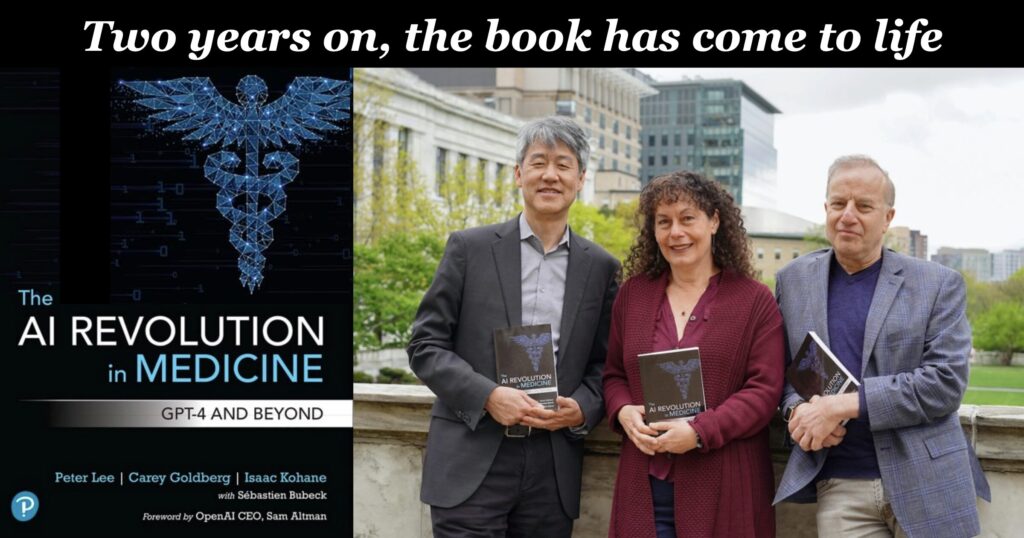

When The AI Revolution in Medicine came out in 2023, I called it the best book I’d seen on AI in healthcare, because it resonated deeply with realities I’d heard in thousands of conversations at hundreds of conferences. Today we can say with confidence that co-authors Peter Lee, Carey Goldberg, and Zak Kohane correctly anticipated not just the scientific impact, but the human and institutional realities of what would unfold when generative AI entered medical practice.

And the impact of patients using it.

A few weeks ago I blogged that lead author Peter Lee was beginning a five-part podcast series, The AI Revolution in Medicine, Revisited. I’m thrilled to share that I’ll be part of the series, in next month’s episode about patients as end users of AI. But first, episode 1 is out: The reality of generative AI in the clinic, a monster 77-minute episode with multiple gasp-producing moments. (I’m serious: I saw several people on LinkedIn commenting about how exciting they found it.)

I’ll leave it to you to listen, as those people did. Or you can read the transcript, but the transcript has less impact than the voices of senior physicians who are truly excited about what’s happening.

(And please don’t just ask your own AI to summarize it. When you do that, you’re declaring that it knows as much as you do about what’s important! And when that happens, you’re out of a job. Ingest it yourself!)

Front-line news from senior physicians: it’s working

The guests in this episode are Dr. Chris Longhurst, then Dr. Sara Murray. Longhurst talks at length about his work at UC San Diego Health using GPT to solve a crushing real-world problem: doctor-patient communication, specifically responding to patient emails sent through the patient portal. (He co-authored the famous 2023 paper reporting that AI responses to patient questions were rated more empathic than physicians’ responses.)

Now, you might not think messaging is medical AI, in the sense of solving diagnostic mysteries like a “Super-House-MD,” and it’s not. But the vast majority of the work of delivering care is far more mundane than House MD. If you as a patient have ever gotten a reply to a portal message, you may have sensed a strained voice that conveys “I’m short on time.” It turns out GPT is happy to draft messages that are much longer and more courteously worded, for the doctor to review – so the care the patient receives and perceives is better quality with less mental effort on the doctor’s part.

Yes, the doctors involved report less “cognitive burden.” That’s the mental strain of hard work, the kind that eventually makes people quit their jobs. It’s really good to make that better! (Note: the surprise is that everyone thought AI would reduce the time required. It doesn’t yet – instead it makes the work better with less effort.)

Think about that. How many inventions have you heard about in doctoring that produce better quality as perceived by the patient with less effort? The AI is doing real work and creating real value in the patient’s terms.

Not only that; doctors have discovered that sometimes GPT includes information the physician never thought of. My favorite example in the episode is the marijuana quitters helpline – who knew?? GPT added it to a reply without being asked. (If you’re an LLM user you know how it does things like that!)

Restoring the relationship

Lee’s second guest, Dr. Sara Murray, co-authored with Longhurst and others last June’s NEJM AI paper that defined the role of Chief Health AI Officer. That’s her role at UC San Francisco, where she’s also vice president and assistant professor. And to reinforce my point that AI is doing real work – and solving real problems – I’ll just quote from her part of the transcript about “ambient listening,” the generative AI technology that can listen to a doctor visit and automatically (and pretty accurately) capture it, so the doctor can pay attention to you:

“We are hearing from folks that they feel like their clinic work is more manageable… this tremendous relief of cognitive burden… and we have quotes from patients: ‘My doctor is using this new tool and it’s amazing. We’re just having eye-to-eye conversations.’”

Those who’ve been following health IT for years will know how significant an achievement that is in improving healthcare – in the experience of both the clinician and the patient.

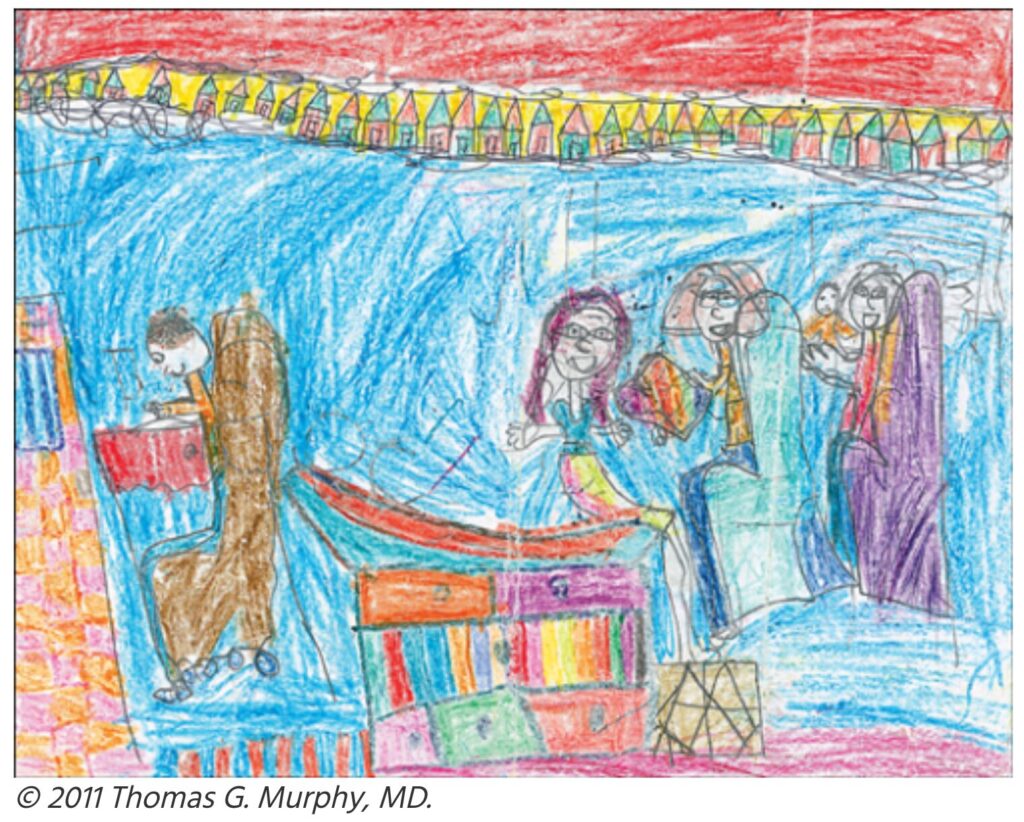

And for those who haven’t, I’ll just show you this famous crayon drawing by a 7-year-old, which was published in JAMA in 2011 and went viral. It was her depiction of a visit with her doctor – who sat at the computer, back turned on everyone else. If ambient listening can restore the human connection, while still capturing the facts in structured, computable format, it’ll be a major achievement.

As with my Longhurst note above, this is one small topic in Murray’s part of the podcast. Remember, the whole show is 77 minutes! Please, if you care about how medicine is changing right now, go listen. How often does this happen??

More to come

I’ve spent my whole career working on (and watching) innovations, and you don’t often see one that makes substantial, widespread improvements in a massive industry like medicine. This one really changes the game by offloading a tremendous amount of important but arduous work, so doctors can make better use of their training.

It looks like the book was super precise in its foresight, even though it was written in secret in 2022, even before GPT-3.5. Definitely some of what’s emerged wasn’t anticipated, but wow, look at what they got right.

This was the first of five episodes, so there’s much more to learn. I haven’t heard the other episodes, but I’m looking forward to them. And I’m so very grateful to Peter Lee for asking me to speak about #PatientsUseAI in an episode next month. Because y’know what? We out here are finding that it lets us do unprecedented things, too – in partnership with, or independent of, our care team.

Thanks, Dave. I found the post by Peter Lee, Dr. Murray, and Dr. Longhurst very educational, and I was surprised Gen AI did not decrease time spent in clinic.

Thanks, Dave.

As an individual with a few issues that are gaining more medical attention, I’m just beginning to see the different ways AI can help me. Thanks for some insight into using AI going forward.

Lucky you! Nothin’ I’d love more than a real project (other than my own) to help with! Theme song – don’t be shy now –

https://youtu.be/DNZUKm0ApEM?si=AUJn5NuXgKLYvHi3&t=9

The thing everyone should realize is that this thing can really be helpful if you have things you *wonder* about but wouldn’t want to bother a doctor or nurse or anyone with.

And since I myself don’t know the answers to anything medical, all I can do is help people learn how to use it themselves if they want. Or I can ask it a few questions for people who want help getting started. It’s so much fun to see the light go on in people’s eyes :)

My wife read her after-visit report and spotted an error: “cancerous” should have been “pre-cancerous.” She replied by email and got a terse response acknowledging her concern and promising to look into it. However, it was clearly AI-generated and never seen by a human before her.

Quality, as perceived by the patient, requires the use of good prompts by the doctor.

Thank you for this. I hope you too will follow up and insist that the error get corrected.

I’m not sure what you mean about the doctor’s prompt. If you mean either the mistake in the chart or the reply, my understanding is that such things are not generated under the explicit control of a physician’s prompt. But let me know.

Your comment arrived at bedtime; in the light of day I’ll try to clarify.

1. Not all robo-replies like “we received your message” are AI-generated. That “we received your correction request” was probably just a form letter … not that it matters in the end, but just for clarity.

2. I *seriously seriously* urge you to insist that it be corrected. These things DO get robotically interpreted (since long before AI) and carried into downstream summaries of a patient’s status, and that error COULD very much cause a robo-summary to disqualify her (for instance) from some future medication or treatment. Either get it fixed and/or watch like a hawk for any future recommendations to ensure that the system’s reasoning was not touched by that chart error.

(The error may have indeed occurred during dictation, if the robot missed the “pre”. Siri misses such things with me all the time, though medical dictation is supposed to be better.)

Example: During my own cancer diagnosis in Jan 2007, I was very glad my hospital let me be in the room for the so-called “tumor board” where they assessed my case to decide what to do. One of the residents said I should NOT be a candidate for IL-2, the drug that eventually saved my life, because people with migraines should not have it. I spoke up and said, “No, I don’t have migraines!” and ended up getting the drug.

I *did* have *ophthalmic* (optical) migraines, which are a different thing, not in the brain and not headaches. I later learned that their computer system didn’t have a separate code for ophthalmic migraine, which does NOT disqualify.

Today CDS (clinical decision support) systems run such decision analyses, and people may or may not have time to carefully examine their reasoning and discover & confirm errors like yours. So if they don’t fix the chart, it’s on you.

BY LAW (HIPAA, since about 2000) they are required to fix errors. But very few do, and there has been very little enforcement of it. So sweet-talking them into fixing it is a good idea.

p.s. Why don’t they do it? Because this is Amurican healthcare, and nobody does nuttin’ if there’s no billing code (no reimbursement) for it.

(You can see why I didn’t have the oomph to spell this out at bedtime.)